o3 is here. Is this AGI?

☕️ 🤖 It’s not, but this is definitely different

OpenAI has been running its “12 Days of OpenAI” as part of its Christmas release celebration. For the most part it was forgettable, right up until the last day.

On the 12th day they previewed their o3 model, which has clearly set a new standard on AGI-related benchmarks. The most interesting coverage is François Chollet’s write-up on the ARC Prize blog → https://arcprize.org/blog/oai-o3-pub-breakthrough

In this post I’ll unpack that write-up, dig into this new territory, and share what I think it means.

Results & Observations

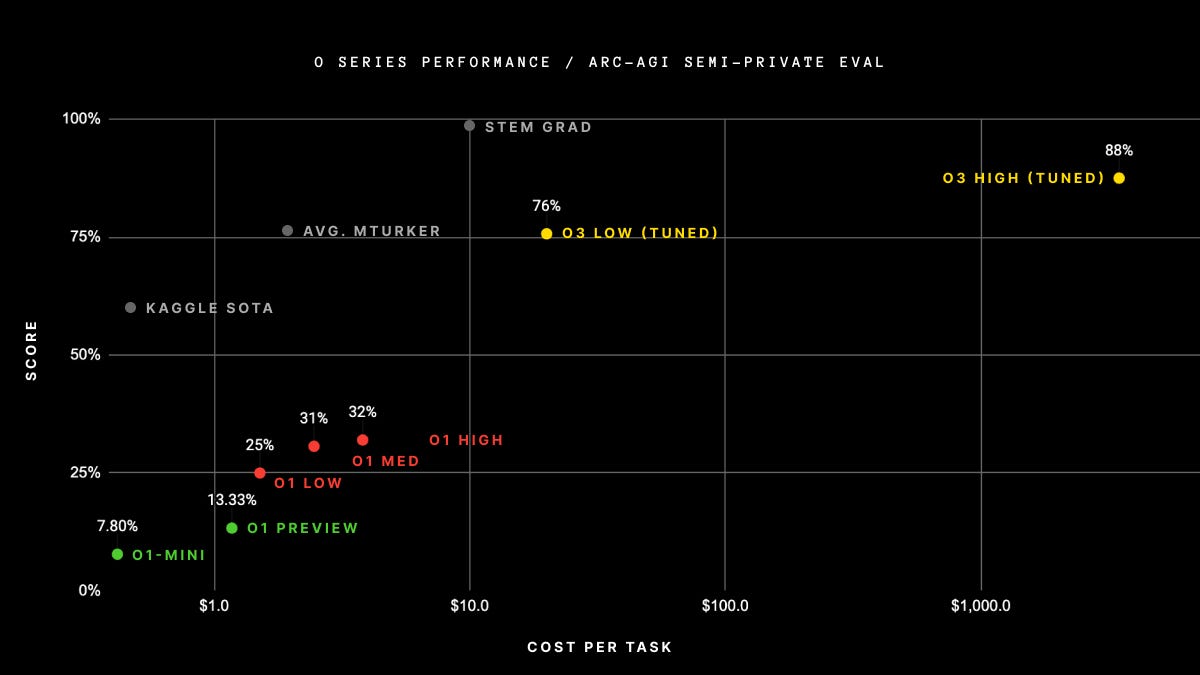

> OpenAI's new o3 system - trained on the ARC-AGI-1 Public Training set - has scored a breakthrough 75.7% on the Semi-Private Evaluation set at our stated public leaderboard $10k compute limit. A high-compute (172x) o3 configuration scored 87.5%.

A few observations are worth discussing. The first, and the most incredible, is the amount of compute thrown at the high-compute o3 configuration. A rough estimate of its cost is 172 × $6,677 = $1,148,444! That high-compute (low-efficiency) configuration scored the highest on both evaluation sets: 87.5% on Semi-Private and 91.5% on Public. Going from the high-efficiency to the low-efficiency configuration buys roughly 12 percentage points on the Semi-Private set (75.7% to 87.5%). That extra performance costs over a million dollars to get!
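The back-of-the-envelope math is easy to reproduce. This sketch assumes, as the rough estimate does, that cost scales linearly with the 172x compute multiplier; that is a simplification, not a published figure. The score gain uses the Semi-Private numbers (75.7% and 87.5%) from the ARC Prize post.

```python
# Back-of-the-envelope cost math for the high-compute o3 run.
# ASSUMPTION: cost scales linearly with the compute multiplier.

BASE_COST_USD = 6_677        # cost figure used in the rough estimate
COMPUTE_MULTIPLIER = 172     # high-compute config used ~172x the compute

# Semi-Private evaluation scores (percent) from the ARC Prize post
SCORE_HIGH_EFFICIENCY = 75.7
SCORE_LOW_EFFICIENCY = 87.5


def estimated_high_compute_cost(base_cost: int, multiplier: int) -> int:
    """Linear extrapolation: total cost = base cost * compute multiplier."""
    return base_cost * multiplier


high_compute_cost = estimated_high_compute_cost(BASE_COST_USD, COMPUTE_MULTIPLIER)
extra_cost = high_compute_cost - BASE_COST_USD
# Difference in percentage points, rounded to hide floating-point noise
score_gain = round(SCORE_LOW_EFFICIENCY - SCORE_HIGH_EFFICIENCY, 1)

print(f"Estimated high-compute cost: ${high_compute_cost:,}")  # $1,148,444
print(f"Extra cost over the base run: ${extra_cost:,}")        # $1,141,767
print(f"Score gain: {score_gain} percentage points")           # 11.8
```

If the real cost curve is not linear in compute, the first number moves, but the shape of the trade-off (a roughly 170x bigger bill for about 12 more points) stays the same.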

Is this AGI?

No, but according to the benchmarks it is the closest thing we know of. This is absolutely a giant leap, and judging by the benchmarks and everything I’ve read, it feels like a genuine breakthrough. For context, GPT-4’s best score on the ARC-AGI benchmark was 5%, and the o1 model got 18%. Clearly, o3 is different. I’m not even sure it counts as a model anymore; it feels like something else altogether.

François Chollet goes on to explain what he believes is going on under the hood. Of course, this is only speculation at this point.

> Effectively, o3 represents a form of deep learning-guided program search. The model does test-time search over a space of "programs" (in this case, natural language programs – the space of CoTs that describe the steps to solve the task at hand), guided by a deep learning prior (the base LLM).
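To make the idea of prior-guided program search a little more concrete, here is a toy sketch. It is not how o3 works (OpenAI hasn’t published that). The “programs” here are sequences of hypothetical grid primitives (`flip_h`, `flip_v`, `transpose`, `invert`) rather than natural-language chains of thought, and a fixed probability table, `PRIOR`, stands in for the base LLM. The search tries programs in order of how likely the prior thinks they are and returns the first one that reproduces every example pair.

```python
import heapq
import math
from itertools import count
from typing import Callable, Optional

# A grid is an immutable tuple of rows so it can be compared with ==.
Grid = tuple[tuple[int, ...], ...]


# A tiny, HYPOTHETICAL "DSL" of grid primitives the search can compose.
def flip_h(g: Grid) -> Grid:
    return tuple(tuple(reversed(row)) for row in g)


def flip_v(g: Grid) -> Grid:
    return tuple(reversed(g))


def transpose(g: Grid) -> Grid:
    return tuple(zip(*g))


def invert(g: Grid) -> Grid:
    return tuple(tuple(1 - c for c in row) for row in g)


PRIMITIVES: dict[str, Callable[[Grid], Grid]] = {
    "flip_h": flip_h,
    "flip_v": flip_v,
    "transpose": transpose,
    "invert": invert,
}

# HYPOTHETICAL stand-in for the learned prior: how likely each step is.
# In Chollet's description this role is played by the base LLM.
PRIOR = {"flip_h": 0.4, "flip_v": 0.3, "transpose": 0.2, "invert": 0.1}


def run(program: tuple[str, ...], g: Grid) -> Grid:
    """Apply each primitive in order."""
    for name in program:
        g = PRIMITIVES[name](g)
    return g


def search(examples: list[tuple[Grid, Grid]], max_depth: int = 3) -> Optional[list[str]]:
    """Best-first search: expand programs in order of total -log(prior)."""
    tie = count()  # tie-breaker so the heap never compares programs directly
    frontier: list[tuple[float, int, tuple[str, ...]]] = [(0.0, next(tie), ())]
    while frontier:
        cost, _, program = heapq.heappop(frontier)
        # Test-time check: does this candidate explain every example pair?
        if all(run(program, x) == y for x, y in examples):
            return list(program)
        if len(program) < max_depth:
            for name, p in PRIOR.items():
                heapq.heappush(frontier, (cost - math.log(p), next(tie), program + (name,)))
    return None


# Two input/output pairs whose hidden rule is "rotate 90 degrees clockwise".
EXAMPLES: list[tuple[Grid, Grid]] = [
    (((1, 2), (3, 4)), ((3, 1), (4, 2))),
    (((5, 6, 7), (8, 9, 0)), ((8, 5), (9, 6), (0, 7))),
]

print(search(EXAMPLES))  # ['transpose', 'flip_h']
```

Because every step has a probability below 1, adding steps only increases the cost, so the first consistent program popped off the heap is the most probable one under the prior. Note that `flip_v` followed by `transpose` also solves these examples; the prior is what decides between the two.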

The post closes with this statement.

> o3 fixes the fundamental limitation of the LLM paradigm – the inability to recombine knowledge at test time – and it does so via a form of LLM-guided natural language program search. This is not just incremental progress; it is new territory, and it demands serious scientific attention.

All that said, it’s hard to know exactly what is happening here. OpenAI is, of course, closed source and seems to have zero intention of releasing anything for open-source supporters like me to look at.

I don’t think many people planned for, or saw coming, a leap like this so soon. It’s a hell of a way to end 2024 and start 2025.

Does this matter to you and me?

Obviously this is a research model and not production ready. But if a production version were released, would it make a difference to you and me? By most measures I’m a regular old software engineer, so I’m always trying to understand how breakthroughs like this will affect me and my everyday life.

It’s hard to say exactly, but this model still seems far too expensive for everyday use. Plus, my money is more on agents in 2025 than on new model breakthroughs. Anthropic has already released its computer use model, which did some interesting things, and from OpenAI’s demo it seems that o3 was performing actions on a computer. Perhaps more and more models will have computer use built in.

If computer use expands and takes over the agent space, then I think AGI-level capabilities like these will become much more disruptive to workers like me, and likely you.

Security & Safety

I keep harping on this, but OpenAI doesn’t seem to care at all about the security of users’ data, or about safety either. So I think it’s important to be extremely careful when using any of its offerings. They can, and do, use your data to improve their technology. The safety of these systems is also a large unknown and needs to be considered thoughtfully and carefully.

AGI Open Source Future

I still believe that democratizing models through open source is the best outcome we could hope for. The ARC Prize seems committed to that mission, and I think it should be the guiding benchmark for assessing whether we have reached AGI.

> ARC Prize is a $1,000,000+ public competition to beat and open source a solution to the ARC-AGI benchmark.

In my opinion, 2025 will still be the year of agents. That said, we have new territory to explore when it comes to AGI, and this space seems to be improving much faster than originally expected. Perhaps 2025 will see AGI and agents come together. It’s shaping up to be another big year in tech.

Let’s connect!

Thanks so much for reading! If you liked this, please let me know below. I’m also looking to collaborate more with others on Substack, so if that’s you, please reach out!