True AI Agents are closer than we all think

Models can use computers like the rest of us now

Claude 3.5 Sonnet can now use computers like the rest of us. It’s incredible to me that agents are already operating at this level, but here we are. In this post I take a look at Anthropic’s article and demo, which you can find here.

So what did they do?

Anthropic released a new version of their Claude 3.5 Sonnet model and trained it to use a computer. How they did it is pretty interesting. Rather than botch the explanation myself, here it is in their own words.

Our previous work on tool use and multimodality provided the groundwork for these new computer use skills. Operating computers involves the ability to see and interpret images—in this case, images of a computer screen. It also requires reasoning about how and when to carry out specific operations in response to what’s on the screen. Combining these abilities, we trained Claude to interpret what’s happening on a screen and then use the software tools available to carry out tasks.
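To make that loop concrete for myself, here is a minimal sketch of the "look at the screen, decide, act" cycle the quote describes. This is not Anthropic's implementation or API. Every name in it (`FakeScreen`, `Action`, `choose_action`, `run_agent`) is made up for illustration, the "screen" is a toy form rather than real pixels, and a hard-coded function stands in for the model.

```python
from dataclasses import dataclass


@dataclass
class Action:
    """One step the agent wants to take (hypothetical shape, not a real API)."""
    kind: str          # "type", "click", or "done"
    target: str = ""   # which on-screen element to act on
    text: str = ""     # text to enter, for "type" actions


@dataclass
class FakeScreen:
    """Stand-in for a real desktop: a form with one text field and a button."""
    field_text: str = ""
    submitted: bool = False

    def screenshot(self) -> dict:
        # A real agent would receive an image; here we return a small description.
        return {"field_text": self.field_text, "submitted": self.submitted}

    def apply(self, action: Action) -> None:
        # Carry out the action, the way mouse and keyboard tools would.
        if action.kind == "type" and action.target == "name_field":
            self.field_text = action.text
        elif action.kind == "click" and action.target == "submit_button":
            self.submitted = True


def choose_action(observation: dict, goal_name: str) -> Action:
    """Stand-in for the model: read the screen state and pick the next step."""
    if observation["submitted"]:
        return Action("done")
    if observation["field_text"] != goal_name:
        return Action("type", target="name_field", text=goal_name)
    return Action("click", target="submit_button")


def run_agent(screen: FakeScreen, goal_name: str, max_steps: int = 15) -> list:
    """Observe, decide, act, repeat, until done or out of steps."""
    history = []
    for _ in range(max_steps):
        observation = screen.screenshot()
        action = choose_action(observation, goal_name)
        history.append(action.kind)
        if action.kind == "done":
            break
        screen.apply(action)
    return history


if __name__ == "__main__":
    print(run_agent(FakeScreen(), "Ada"))  # ['type', 'click', 'done']
```

The `max_steps` cap is my own addition. It is there because an agent like this works within a step budget, so the loop has to end even when the task is not finished.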

Stepping back and looking at the steps they took, it honestly feels like a no-brainer in hindsight. These models already had many of the capabilities needed to do this, so rather than building some large agent framework and getting developers to adopt it, they sort of just skipped that step.

Literally last week I was thinking it would be good to form an open standard around agent frameworks that all companies could use to have their models communicate with one another. Then this gets released, and it feels like: why even bother with all that when you can train a model to use a computer like the rest of us?

That said, Anthropic admits that this is very much a beta and that the model sometimes does random things as it’s trying to reason about a task.

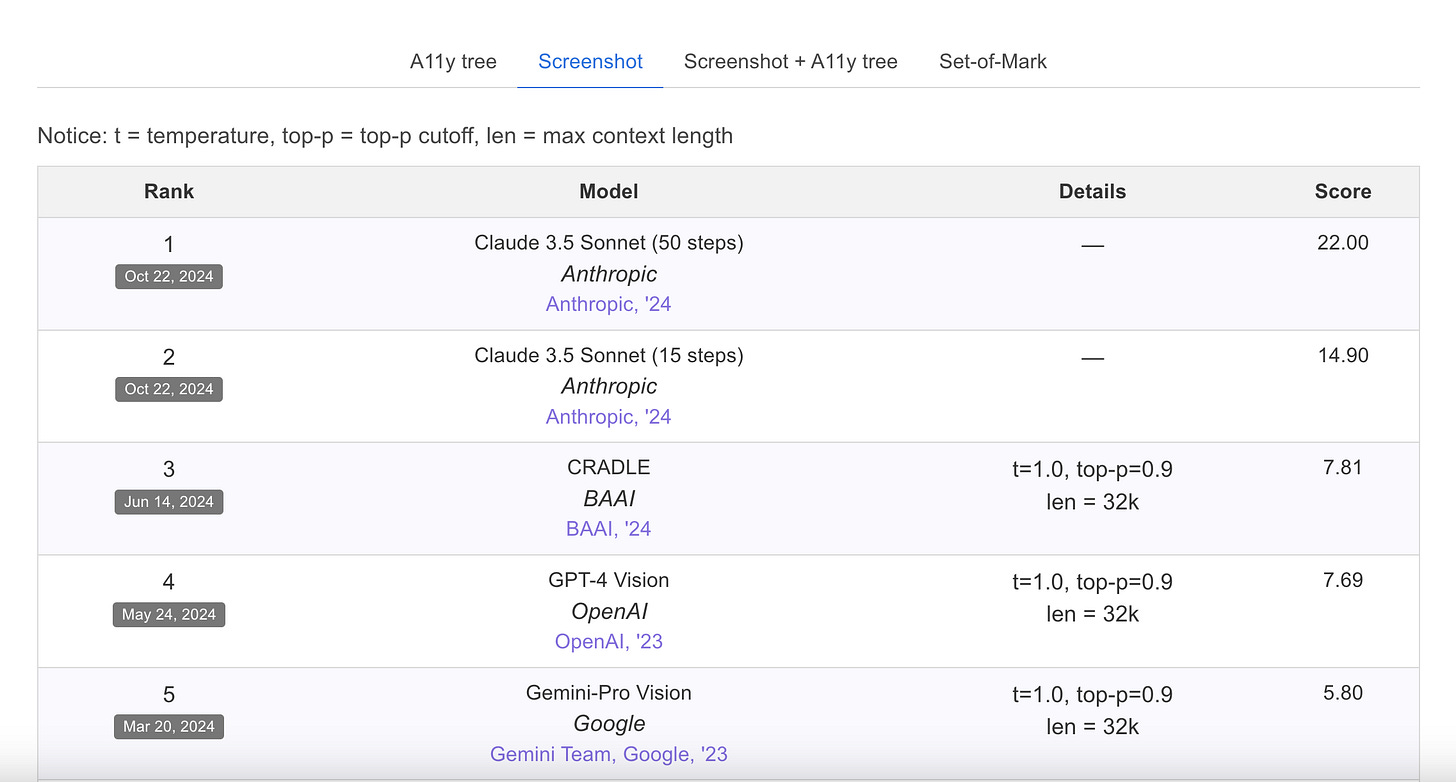

There is also a benchmark called OSWorld that measures how good an agent is at completing tasks in a real computer environment, and in its “Screenshot” category Claude 3.5 Sonnet wins by a wide margin. It scores 14.9% in 15 steps and 22% in 50 steps. The article puts human-level skill at ~75%, so it’s still a ways off, but I’m sure it’ll improve fast.

Is this a good idea?

I don’t know how to feel about this type of progress. On one hand, it’s absolutely incredible that all of this is happening. On the other, I can’t help but wonder if this is a terrible idea. I love the idea of something I can delegate annoying tasks to, but a lot of those tasks would involve pretty important things like my finances and health, and all of that requires passwords.

This is data that I never would have exposed in this way had it not been for the promise of these models helping with some task. This is also data I only trust to certain institutions, and even they get it wrong sometimes. I guess I’m just not a fan of all this data being handled by one or two model providers. On top of that, the models act on the data directly, and the data isn’t encrypted in any way.

In the end, I’m not sure this is the best idea. I’m all for progress, but it seems like we need to figure out the security side of all this first. Still, I’m sure other folks will be fine with it and things will continue to move forward. I expect this all to move pretty quickly.